Virtual Workshop - Data Management: From Instrument to First Storage

The data lifecycle for most U.S. National Science Foundation (NSF) Major and Mid-scale Facilities starts with data acquisition and capture from scientific instruments such as telescopes, sensors, field devices, spectrometers, accelerators, interferometers, magnets, and lasers. Quite often, the data acquisition step, together with the data management practices a facility uses to capture this initial data, determines the effectiveness of the later stages of the data lifecycle.

This virtual workshop, organized by CI Compass, the NSF Cyberinfrastructure Center of Excellence, will explore this crucial first step under the theme “Data Management: From Instrument to First Storage.” The workshop speakers, representing a diverse set of NSF Major and Mid-scale Facilities, will discuss the challenges, best practices, potential solutions, and open issues in handling the flow of data from facility instruments to first storage. Topics include metadata tagging, enabling FAIR (Findable, Accessible, Interoperable, and Reusable) attributes, data storage, on-the-fly control, incorporating user-specific instruments, managing streaming data, and choosing storage and database technologies.

This was a virtual event hosted on Zoom, held January 22 - 24, 2025, from 9 a.m. - Noon PT / 10 a.m. - 1 p.m. MT / 11 a.m. - 2 p.m. CT / Noon - 3 p.m. ET.

Agenda:

Wednesday, January 22, 2025

Please note: all times below are listed in PST.

9:00 - 9:15 a.m.

Workshop Introduction

Speaker: Ewa Deelman, NSF CI Compass

9:15 - 9:45 a.m.

Instrument Metadata @ DataCite

The most recent version of the DataCite Metadata Schema, released in January 2024, introduced “Instrument” as a resource type for Digital Object Identifiers (DOIs). Instruments can now be identified and connected to the datasets they collect and to the research results that depend on them. In addition, the DataCite Metadata Schema includes many elements that can be used to describe instruments at varying levels of detail. Examples from DataCite will be used to explore how early adopters are using these elements, and results from a community dialog will outline future directions.

Speaker: Ted Habermann, Metadata Game Changers

| 9:45 - 10:15 a.m. |

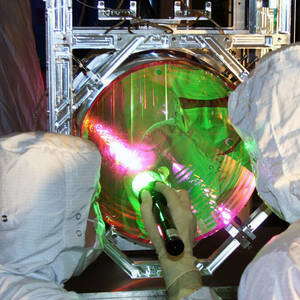

Data Management in High Peak Power Petawatt Laser Experiments at the ZEUS Facility This talk presents an overview of the ZEUS facility and its capabilities, focusing on data lifecycle management for laser wakefield experiments. In our first year, we achieved 1.0 PW and up to 2.4 GeV electron beam energy, with plans to reach 2 PW by March 2024 and 3 PW by September 2026, making ZEUS the highest peak power research facility in the U.S. We'll discuss the data lifecycle from acquisition to dissemination, including the architecture for initial storage. The presentation will highlight problems solved, lessons learned, and current and future challenges. Additionally, we will cover our approach to incorporating user-specific instruments and ensuring that data remains useful and usable throughout its lifecycle as a user facility for high field science. |

Speakers: Franko Bayer and Qing Zhang, Zettawatt-Equivalent Ultrashort Pulse Laser System (ZEUS) |

10:15 - 10:45 a.m.

Just FTP It

It’s a simple idea. A machine produces data. Now simply find the data and copy it to a central repository. I’m going to talk about some of the unexpected challenges we faced in building our architecture, from acquisition to first storage. Of course, we had the usual challenges of transmission reliability, provenance, ownership, etc., but we also encountered a few unexpected challenges that might trigger some commiseration in you, or Schadenfreude if you prefer, and might also help you evaluate your next data ingest project from a slightly different perspective.

Speaker: Chris Bontempi, Network for Advanced Nuclear Magnetic Resonance (NMR)

10:45 - 11:15 a.m.

Conducting the Quartet: synchronizing data streams in a custom-built mass spectrometer with four mass analyzers

The Ion Cyclotron Resonance (ICR) facility of the National High Magnetic Field Laboratory (MagLab) is home to the highest-performing ICR mass spectrometers in the world. Recently, the flagship 21 tesla instrument was upgraded with a new front end containing an additional mass analyzer, enabling higher performance and new experimental workflows. This presentation will discuss the handling of data streams in this hybrid instrument, including the difficulties of integrating a commercial front-end system with a custom-built ultrahigh field ICR mass spectrometer.

Speaker: David Butcher, National High Magnetic Field Laboratory (MagLab)

11:15 - 11:30 a.m.

Wrap Up

Thursday, January 23, 2025

Please note: all times below are listed in PST.

9:00 - 9:15 a.m.

Workshop Introduction

9:15 - 9:45 a.m.

Data Sharing Experiences at NHERI Wave Research Laboratory at Oregon State University

This talk presents best practices for sharing data from NSF-supported research at the Natural Hazards Engineering Research Infrastructure (NHERI) Wave Research Facility at Oregon State University. We will discuss the challenges of developing a culture of data sharing among visiting researchers, how much ‘hand holding’ is too much, commitment to publication after the experiments have concluded, and creating data sets that are useful and usable through the DataDepot at DesignSafe.org.

Speaker: Dan Cox, Natural Hazards Engineering Research Infrastructure (NHERI) Coastal Wave/Surge and Tsunami

| 9:45 - 10:15 a.m. |

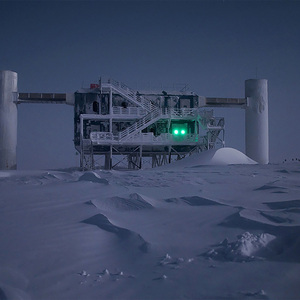

Planes, Satellites, Trucks, Ships, and Fiber Optics - How to manage data coming from the bottom of the world The IceCube Neutrino Observatory is one of the premier facilities to study neutrinos and their origin above 10 GeV. The observatory is located at the geographic South Pole with no continuous network connection. We will show how we move data from the South Pole to the USA and how we manage data once it gets to the USA. Additionally, we will see how we manage the simulated data required to perform data analysis with IceCube. |

Speaker: Benedikt Riedel, IceCube Neutrino Observatory (IceCube) |

10:15 - 10:45 a.m.

Streaming Data for the International Gravitational Wave Detector Network

One of the main scientific objectives of the international network of gravitational-wave detectors is the prompt identification and localization of gravitational-wave event candidates, to enable multi-messenger observation of the astronomical sources of potential events. In this talk I will provide an overview of the ongoing effort to improve the data flow that makes such low-latency analysis possible.

Speaker: Jameson Rollins, Laser Interferometer Gravitational-Wave Observatory (LIGO)

10:45 - 11:15 a.m.

Vera C. Rubin Observatory Data Management

At 20 TB a night, Rubin is big data for optical astronomy. A wide, fast, deep survey providing measurements for ~18 billion stars and ~20 billion galaxies will usher in a new era in astronomy. We will briefly look at the architecture of the data management system and share some lessons learned.

Speaker: William O'Mullane, Vera C. Rubin Observatory, formerly the Large Synoptic Survey Telescope (LSST)

11:15 - 11:45 a.m.

OOI - Processing an Ocean of Data

This presentation will explore the architecture designed to manage a continuous stream of data for storage and distribution over a 30-year collection period. It will address key challenges such as storage constraints, data discovery, and the need for forward-thinking strategies to accommodate future changes, with a particular focus on their impacts on the mission of the NSF Ocean Observatories Initiative (OOI) program.

Speaker: Jeff Glatstein, Ocean Observatories Initiative (OOI) / Woods Hole Oceanographic Institution (WHOI)

11:45 a.m. - Noon

Wrap Up

Friday, January 24, 2025

Please note: all times below are listed in PST.

9:00 - 9:15 a.m.

Workshop Introduction

9:15 - 9:45 a.m.

Weaving NEON data into the cloud

The National Ecological Observatory Network (NEON) is a major infrastructure that supports continental-scale research on long-term ecological drivers and responses within and across ecosystems. With more than 16,000 sensors generating more than 5.6 billion data points per day, and up to 400 field technicians collecting highly heterogeneous environmental and ecological data as well as physical samples (more than 100,000 per year) across the United States, NEON relies on numerous innovations in hardware, architecture, and software to manage the incoming data.

Speaker: Christine Laney, National Ecological Observatory Network (NEON)

9:45 - 10:15 a.m.

Maintaining sanity while moving, storing, and cataloging terabytes of data and millions of files on a nightly basis

Large volumes of data spread across millions of files have become the norm at large facilities such as the Daniel K. Inouye Solar Telescope (DKIST). DKIST’s many instruments produce large amounts of data in both small and large files, numbering in the millions. Moving this data from the telescope to the storage and processing facilities on a regular basis, without data loss and with assured delivery despite transmission-channel glitches, is fundamental to fulfilling the facility’s mission. DKIST uses a range of technologies and a scalable microservices architecture to move, store, inventory, and catalog the data so that the first part of the DKIST mission (retrieve and store) is completed without failure. The same architecture and technologies also make the scientific data searchable, discoverable, and easily retrievable by users.

Speaker: Robert Tawa, National Solar Observatory (NSO)

10:15 - 10:45 a.m.

Ocean Networks Canada’s Data Management and Archival System: Oceans 3.0

Ocean Networks Canada operates cabled ocean observatories on Canada’s three coasts, as well as mobile and remote platforms on land and sea around the globe. Oceans 3.0 is our software solution for the entire data lifecycle, from acquisition to storage and distribution. The system has been developed incrementally since 2006, with many lessons learned and problems solved along the way. The diversity of the instrumentation and data products we support may be both our most difficult challenge and a distinguishing feature.

Speaker: Ben Biffard, Ocean Networks Canada

10:45 - 11:15 a.m.

Dishing the Dirt-to-Disk for In-Situ Geophysical Measurements

For the past 35 years, the joint efforts of NSF-funded geodetic and seismic data facilities have yielded a 50-year treasure trove of site-gathered ground motion measurements that are openly accessible to researchers. Collecting this data and processing it into storage still involves many of the same challenges today as it did decades ago, but widely available broadband and the commoditization of sensitive technologies have enabled data collection to expand in speed and volume by two to three orders of magnitude, with no signs of slowing down. This presentation will highlight the challenges of initial data collection from distributed installation sites, the preparation of that data for archival processes, and the cataloging that makes it easily available to researchers around the globe. We will also look at the future of geophysical measurements and highlight what will be needed to meet the demands of the coming decade.

Speaker: Robert Casey, EarthScope Consortium

11:15 - 11:30 a.m.

Wrap Up